Test Lab Update – January 2015

In October 2014, I wrote an article about what I was looking to build in my new home test lab. Since then I have been hard at work setting it up, and I have made a number of design changes along the way. Here is an update on my progress…

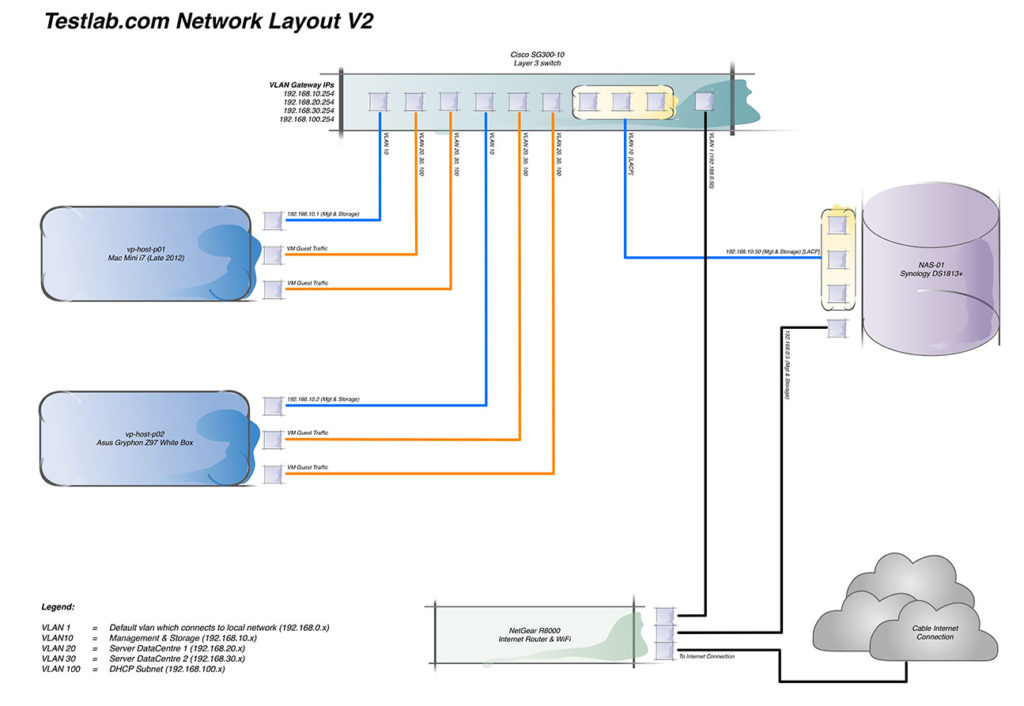

Updated Network Diagram

Since October, I have bought some new hardware and have also changed my mind on the hardware I was originally going to use. Originally, I was planning to use 2 x Mac Mini Core i7 (Late 2012) with 16GB RAM as my two test lab hosts. However, after I purchased my first Mac Mini, I realised that even with 16GB RAM I could only run around 6 or 7 usable VMs on it at one time. For this reason, I decided against purchasing a second Mac Mini and instead built my own VMware ESXi white box.
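The 6 or 7 VM ceiling is easy to sanity-check with a little arithmetic. The Python sketch below is a rough estimate that ignores memory overcommit and per-VM overhead; the 2GB-per-VM and 2GB hypervisor reservation figures are assumptions for illustration, not measurements from this lab.

```python
def usable_vm_count(host_ram_gb: float, per_vm_gb: float, reserved_gb: float) -> int:
    """Estimate how many VMs fit in host RAM without memory overcommit.

    per_vm_gb and reserved_gb are assumed inputs: the average RAM given to
    each lab VM and the RAM held back for the hypervisor itself.
    """
    if per_vm_gb <= 0:
        raise ValueError("per_vm_gb must be positive")
    available_gb = max(host_ram_gb - reserved_gb, 0)
    # Only whole VMs count, so round down.
    return int(available_gb // per_vm_gb)


# Assumed figures: ~2GB per lab VM and ~2GB kept back for ESXi.
for ram_gb in (16, 32):
    print(f"{ram_gb}GB host: about {usable_vm_count(ram_gb, 2, 2)} VMs")
```

With those assumptions a 16GB host tops out at 7 VMs and a 32GB host at 15, so doubling the RAM slightly more than doubles the usable VM count once the fixed hypervisor reservation is paid.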

I have updated my network diagram (above) to reflect all of the changes I have made, including the upgrade of my internet router to a NetGear R8000.

ESXi White Box

In addition to supporting 32GB RAM and a much faster, more energy-efficient CPU, my white box serves a second purpose: the occasional gaming machine. I installed VMware ESXi 5.5 on a USB flash drive and Windows 8.1 on the SSD, so that I can dual boot when required.

Before getting into the details of the build, I thought I would document my requirements:

- Smallest form factor possible

- Lowest power consumption possible

- As quiet as possible

- Minimum 32GB of memory

- Ability to install a full-sized graphics card

- At least 3 x Gigabit Ethernet NICs

- Be able to dual boot between ESXi and Windows

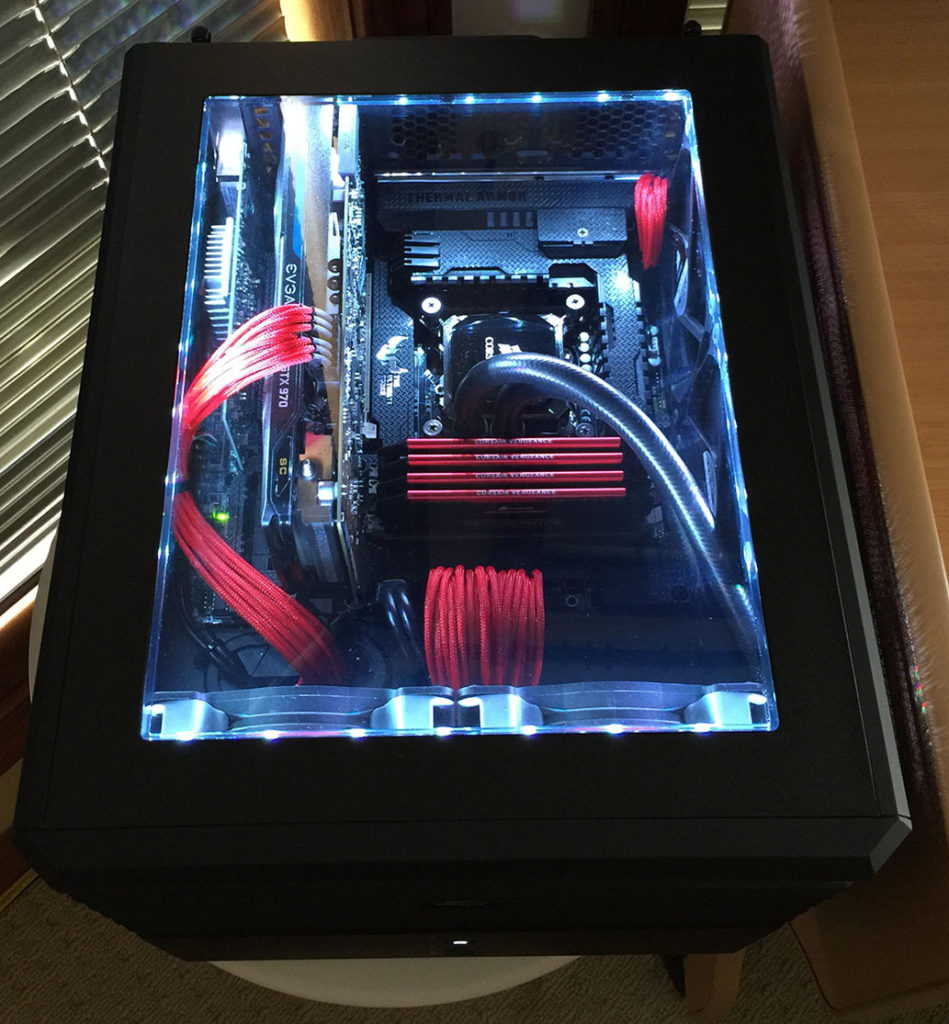

- Has to look awesome

After much research, I decided to go with a micro-ATX machine that supports a full-sized GPU and a closed-loop water cooler (for quiet and efficient cooling). I also added an Intel quad port Gigabit NIC to be used in my VMware environment. Here are the full specs of my ESXi white box:

- Corsair Carbide Air 240 Black Case

- ASUS Gryphon Z97 Motherboard

- ASUS Gryphon Z97 Armor Kit

- Intel Core i7 4790K 4.0GHz Quad Core CPU

- Corsair 32GB DDR3 1600 Vengeance Pro Red

- Samsung 840 EVO 250GB SSD

- EVGA GTX 970 SuperClocked ACX 2.0

- Intel Quad Port Gigabit Ethernet Controller

- Corsair H100i CPU Water Cooler

- Corsair SP120 Quiet 120mm Fans x 2

- Corsair AF120 Quiet 120mm Fans x 2

- Corsair HX750i Platinum Power Supply

- Corsair Link Commander Mini

- Corsair Link RGB LED Lighting Kit x 2

- Corsair Sleeved Modular PSU Cable Kit Red

- Corsair Sleeved Modular ATX Cable Red

I am not going to explain why I chose each component; however, if you are interested in the reasoning behind a particular one, leave me a comment below.

Here is what the final build looks like:

Server Rack

My other major upgrade was a 12RU server rack, which contains all of my test lab and most of my home network components. The only reason I did this was to keep my test lab organised and clean, especially the network cabling, which can get messy really quickly.

In addition to the rack, I colour-coded my network cables: yellow for the test lab and black for the internal network. I am not going to go into too much detail, but I will say that the server rack is awesome. Not only does it look really good, but it also keeps everything dust-free and relatively cool.

Here is what my current test lab looks like:

In addition to building and configuring the physical components of my test lab, I have completed my VMware vSphere 5.5 install, using distributed switches and host profiles. I did have a few issues (which I have documented and will continue to document), however I can report that everything is working well, and with the additional resources I am now able to test heaps of new things!

If you have any questions or comments, let me know below.

Thanks

Luca

How were you able to load ESXi 5.5 onto your white box? I have the same motherboard but am having issues with the ESXi installer seeing the hard drives. I even have the 120GB version of the same drive you have. I modified multiple settings for the drive and turned off Secure Boot, with no luck.

Thank you,

-Manuel

Hi Manuel,

I also had some issues getting it running, but I did get it working in the end. I installed it on a bootable USB stick, but I could still see my hard drive during the installation. I was actually going to write up an article on how to install ESXi 5.5 on the Asus Gryphon Z97, so I will do that for you within the next couple of days.

Thanks

Luca

Try a later version of ESXi; it's possible the driver has been added since.

Hello Luca,

Thanks for all the valuable information you provide on your blog. I regularly follow your blog and learn stuff which is greatly appreciated.

I want to check one thing. I have a Lenovo NAS device; it is a 4-bay unit but does not support SSDs, so the only drives I can put in are SATA HDDs.

I have a SATA HDD in my local ESXi host with my XA 7.6 lab on it, but it is just too slow to boot up VMs.

So if I get HDDs for my NAS device, do you think the performance will be the same?

Also, I have two NICs in my ESXi host: one is connected to the LAN and the other is for the DMZ.

Can I use one of them to configure vMotion, as I'm limited when it comes to NICs?

I appreciate your help, Luca.

Thanks,

Pavan

Hi Pavan,

I am glad that my articles have been of help; it's always good to hear that.

I am not sure what model NAS you have and what options are available, however if you install 3 or 4 SATA disks in your NAS you could create a RAID 5 or even a RAID 10 volume. You can then present this as one or more datastores (LUNs) to vSphere. Using RAID 5 or 10 will generally improve performance over a single disk, especially for reads. Before going down that path, though, I would investigate what is actually causing the performance issues, as it could be CPU or memory usage on the host, or even not enough memory or vCPUs assigned to the VM (or possibly too many, although this doesn't sound like the case if you are experiencing slow boot times).
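A common rule of thumb for comparing these layouts is the RAID write penalty: each front-end write costs 1 back-end I/O on a single disk, 2 on RAID 1 or RAID 10 (both mirror copies are written) and 4 on RAID 5 (read data, read parity, write data, write parity). The Python sketch below turns that into an estimate of usable IOPS for a mixed workload. The 80 IOPS per 7,200 rpm SATA disk and the 70/30 read/write mix are assumed figures, and the model ignores caching and controller behaviour, so treat the output as a comparison between layouts rather than a prediction for any particular NAS.

```python
# Rule-of-thumb write penalties: back-end I/Os needed per front-end write.
WRITE_PENALTY = {"single": 1, "raid1": 2, "raid10": 2, "raid5": 4}


def effective_iops(level: str, disks: int, disk_iops: float, read_fraction: float) -> float:
    """Estimate front-end IOPS a disk group can sustain for a read/write mix.

    Back-end load = reads * 1 + writes * penalty. Setting that equal to the
    combined capability of all disks and solving for front-end IOPS gives
    raw / (read_fraction + write_fraction * penalty).
    """
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be between 0 and 1")
    raw = disks * disk_iops  # assumes reads are spread across every disk
    write_fraction = 1.0 - read_fraction
    return raw / (read_fraction + write_fraction * WRITE_PENALTY[level])


# Assumed inputs: 80 IOPS per SATA disk, 70% reads / 30% writes.
for level, disks in (("single", 1), ("raid1", 2), ("raid10", 4), ("raid5", 4)):
    print(f"{level:>6} x{disks}: ~{effective_iops(level, disks, 80, 0.7):.0f} IOPS")
```

With these assumed figures, RAID 10 across four disks comes out at roughly three times a single disk, while RAID 5 on the same four disks gains less because every write costs four back-end I/Os. The RAID 1 line reflects the mirroring trade-off discussed further down: reads can use both disks, but every write has to land on both.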

You can check this performance information by clicking on the host in vCenter and then selecting the Performance tab. From here you can check disk, CPU and memory performance. This should give you a better indication of whether it is a storage issue (although storage does sound like the most likely cause).

As for your host, I am not sure of your underlying network configuration, so it is hard to comment; however, you could use one NIC for management and vMotion traffic and the other for virtual machine traffic. That would include DMZ traffic, as I am assuming the DMZ is just another VLAN with a firewall segregating it from the internal VLANs. If this is the case, just tag all of the VLANs you require for VM traffic on the second NIC (including your DMZ VLAN) and then create the appropriate port groups for each VM network. You can then deploy VMs on the internal VLANs or on the DMZ VLAN.
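The two-NIC split described above can be written down as a small plan and checked for missing trunk VLANs before any switch or vSwitch changes are made. This is a hypothetical Python sketch: the VLAN IDs, port group names and vmnic names are placeholders rather than values from this lab, and tagging management and vMotion on the first NIC is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PortGroup:
    name: str
    vlan_id: int  # 802.1Q VLAN ID tagged by this port group (placeholder values)
    uplink: str   # physical ESXi NIC that carries the port group


# Hypothetical layout: NIC 1 for management/vMotion, NIC 2 for VM traffic incl. DMZ.
PORT_GROUPS = [
    PortGroup("Management", 10, "vmnic0"),
    PortGroup("vMotion", 11, "vmnic0"),
    PortGroup("VM-Internal", 20, "vmnic1"),
    PortGroup("VM-DMZ", 30, "vmnic1"),
]

# VLANs allowed on the physical switch trunk port facing each NIC (also placeholders).
SWITCH_TRUNKS = {"vmnic0": {10, 11}, "vmnic1": {20, 30}}


def missing_vlans(groups: list[PortGroup], trunks: dict[str, set[int]]) -> list[tuple[str, int, str]]:
    """Return (port group, VLAN, NIC) entries whose VLAN is not allowed on that NIC's trunk."""
    return [
        (pg.name, pg.vlan_id, pg.uplink)
        for pg in groups
        if pg.vlan_id not in trunks.get(pg.uplink, set())
    ]


print(missing_vlans(PORT_GROUPS, SWITCH_TRUNKS))  # an empty list means every VLAN is trunked
```

Keeping the plan as data makes the rule explicit: every VLAN a port group tags must also be allowed on the trunk port of the physical switch that its uplink NIC plugs into, otherwise VMs on that port group will have no connectivity.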

Hope this helps.

Luca

Thanks Luca.

The NAS I have is this one:

http://www.lenovo.com/images/products/server/pdfs/datasheets/lenovoiomega_ix2_data_sheet_q12013.pdf

As for the networking, I have two network cards: one is connected to the internal LAN and the other is connected to my ISP router.

I designed it this way to test NetScaler for external access.

I want to separate all of this out so that I can have one VLAN for my management, vMotion, NAS, etc.

Is that possible with two network cards and a Cisco L3 switch?

Please advise,

Many Thanks,

Pavan

Hey Pavan,

Yeah, it is possible. You just need to tag multiple VLANs on the switch port that connects to your ESXi host NIC (i.e. configure it as a trunk). I would connect the ISP router to your Cisco switch, like I did. If you have a look at the network layout diagram, you can see what I have done, and you can set up something similar.

As for your NAS, it seems you have a two-bay NAS that only supports RAID 1. RAID 1 mirrors your data, which is good for redundancy, as you can still access your data if one hard disk fails; however, write performance is no better than a single disk, as every write has to go to both disks. Read performance is good, as it can read from both disks.

If you are looking for performance rather than redundancy, I would just set up each disk as a separate volume and not worry about RAID 1.

Hope this helps

Luca

Hi Luca,

Can you tell me which rack this is?

thanks

Hi Simon,

Sorry, it's not a branded rack; it's just a rack I got from a local company here in Australia.

Luca

Hi,

Can I know how you managed to get ESXi 5 running on your system? I am running an E8500 & Asus P5Q Pro. When I tried to run ESXi 5 inside VMware Workstation, it throws out an error:

1) This system is only running on 1 core when the E8400 is dual-core.
2) There is no virtualization in the BIOS. This is not possible when I have already enabled Intel VT.

Any ideas? I am pulling my hair out over this issue.

Regards,

Alex

Hi Alex,

I am not running ESXi in VMware Workstation; I am running it natively. If you need to install ESXi 5.5 on an Asus motherboard, have a look at my article: Installing ESXi 5.5 on an Asus Gryphon Z97.

Also, just to let you know: when I installed ESXi 6.0 on the same system, I didn't need to do anything, as all of the drivers were already available by default within ESXi 6.0. You might want to give ESXi 6.0 a go, because its driver support has significantly improved.

Hope this helps

Luca

Have you considered putting that AirPort Extreme outside of your rack? I used to have a flat one like that, which I just stuck to the side of the rack with Velcro tape. I have since moved on to the newer ones with 802.11ac, so now mine sits on top.

Hi Karsten,

Mine is just a Time Capsule, not actually a Wi-Fi router. My Wi-Fi router is sitting on top of my rack, on the outside, similar to your solution.

Thanks for the idea though.

Luca